In celebration of International Women’s Day, Google Hong Kong held its annual Google Women Techmakers Program on 13 April 2019. SearchGuru joined this day-long event featuring speakers, panel discussions, hands-on workshops, and networking, all centred on celebrating the contributions of women in tech.

Led by Emma Wong, organiser of Google Developer Group & Women Techmakers, the Actions on Google workshop guided participants through enhancing Google Assistant by applying Google's language processing, machine learning capabilities and tools to their own projects.

Why is Google Assistant so popular?

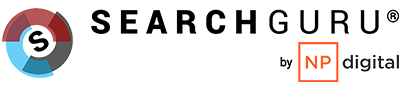

Google Assistant is available on many devices, including Android, iOS, PCs, TVs, cars, headphones, watches and speakers. As Scott Huffman, Google's VP for Google Assistant, announced last year, it is now on over 500 million devices, and Google is aiming to reach 1 billion devices this year.

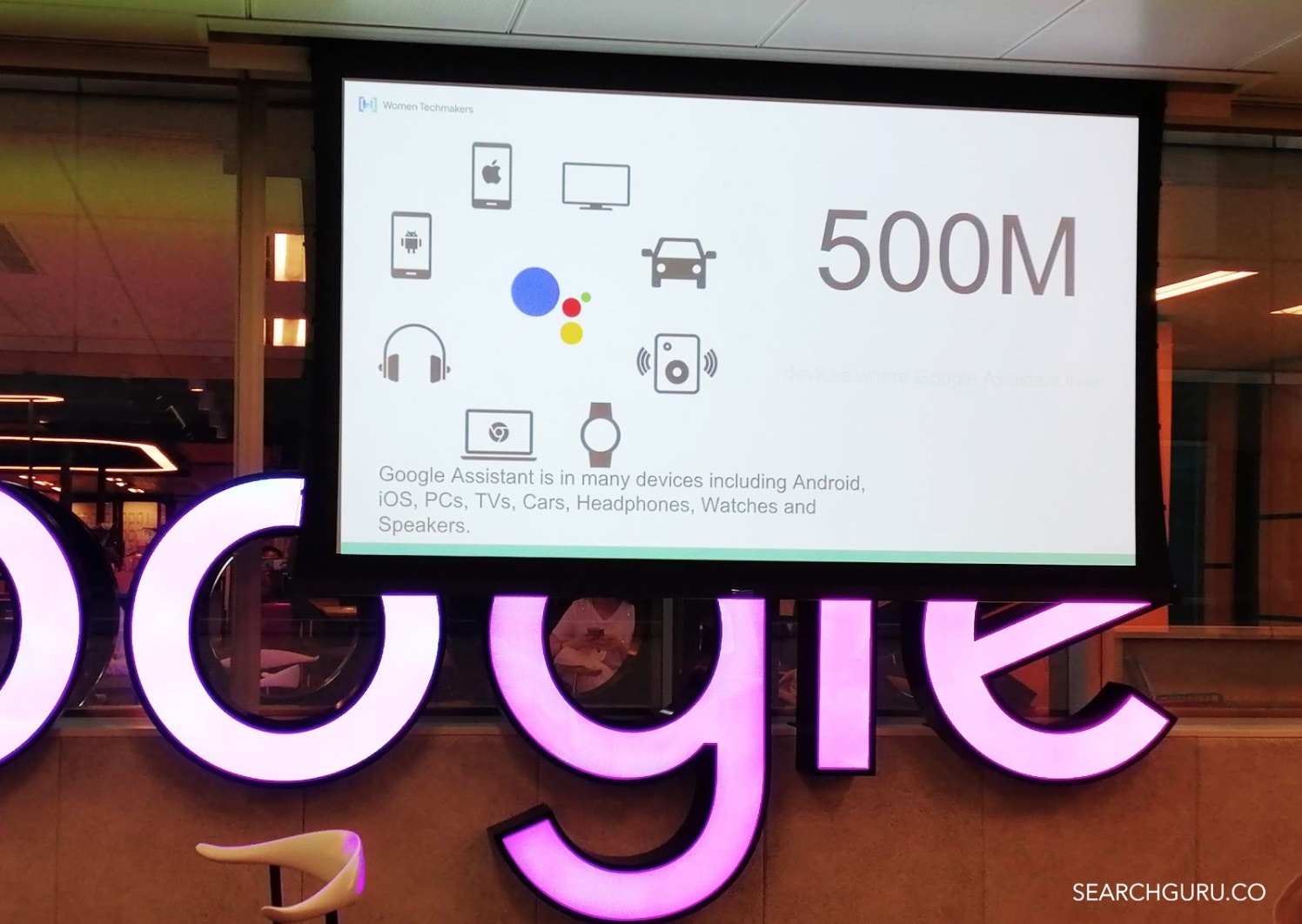

The AI-powered Google Assistant supports different surface capabilities, mainly Audio Output (speakers, earphones, smart assistants, smartphones) and Screen Output (smartphones, TVs, computer and laptop screens).
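An Action often shapes the same answer differently for each surface. The sketch below illustrates that idea. The capability names `actions.capability.AUDIO_OUTPUT` and `actions.capability.SCREEN_OUTPUT` are the ones Actions on Google reports for a device. The `build_reply` function and the dict it returns are hypothetical and simplified for illustration. They are not the real response schema.

```python
from __future__ import annotations

# Capability names as reported by Actions on Google for the user's device.
AUDIO_OUTPUT = "actions.capability.AUDIO_OUTPUT"
SCREEN_OUTPUT = "actions.capability.SCREEN_OUTPUT"


def build_reply(capabilities: set[str], speech: str, card_title: str) -> dict:
    """Shape one answer for the surface it will be delivered on.

    Hypothetical, simplified output format; not the Actions on Google schema.
    """
    reply: dict = {}
    if AUDIO_OUTPUT in capabilities:
        # Read aloud on speakers, earphones and phones.
        reply["speech"] = speech
    if SCREEN_OUTPUT in capabilities:
        # Shown as text on phones, TVs and laptops.
        reply["display_text"] = speech
        # A visual element that only screen surfaces can render.
        reply["card"] = {"title": card_title}
    return reply
```

On a smart speaker, `build_reply({AUDIO_OUTPUT}, "Your order is confirmed.", "Order summary")` yields only the spoken text. A phone reporting both capabilities also gets the text and the card.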

Does Google Assistant speak your language?

Google Assistant serves a growing audience across 22 languages. Apart from English, it also serves users in Korean, Thai, Indonesian, Hindi, Portuguese, Spanish and more. Its first Traditional Chinese version, for users in Taiwan, launched on 26 Oct 2018, and Cantonese for Hong Kong users is expected to launch this year.

What are the three major components in the Google Assistant ecosystem?

1. Google Assistant: A conversation between you and Google that helps you get things done in your world.

2. Actions on Google (AoG): An open platform for developers to extend the Google Assistant functionality.

3. Devices with Google Assistant built-in: These include voice-activated speakers such as Google Home, Android phones, iPhones and more.

The 1 million Actions that answer the world’s voice queries

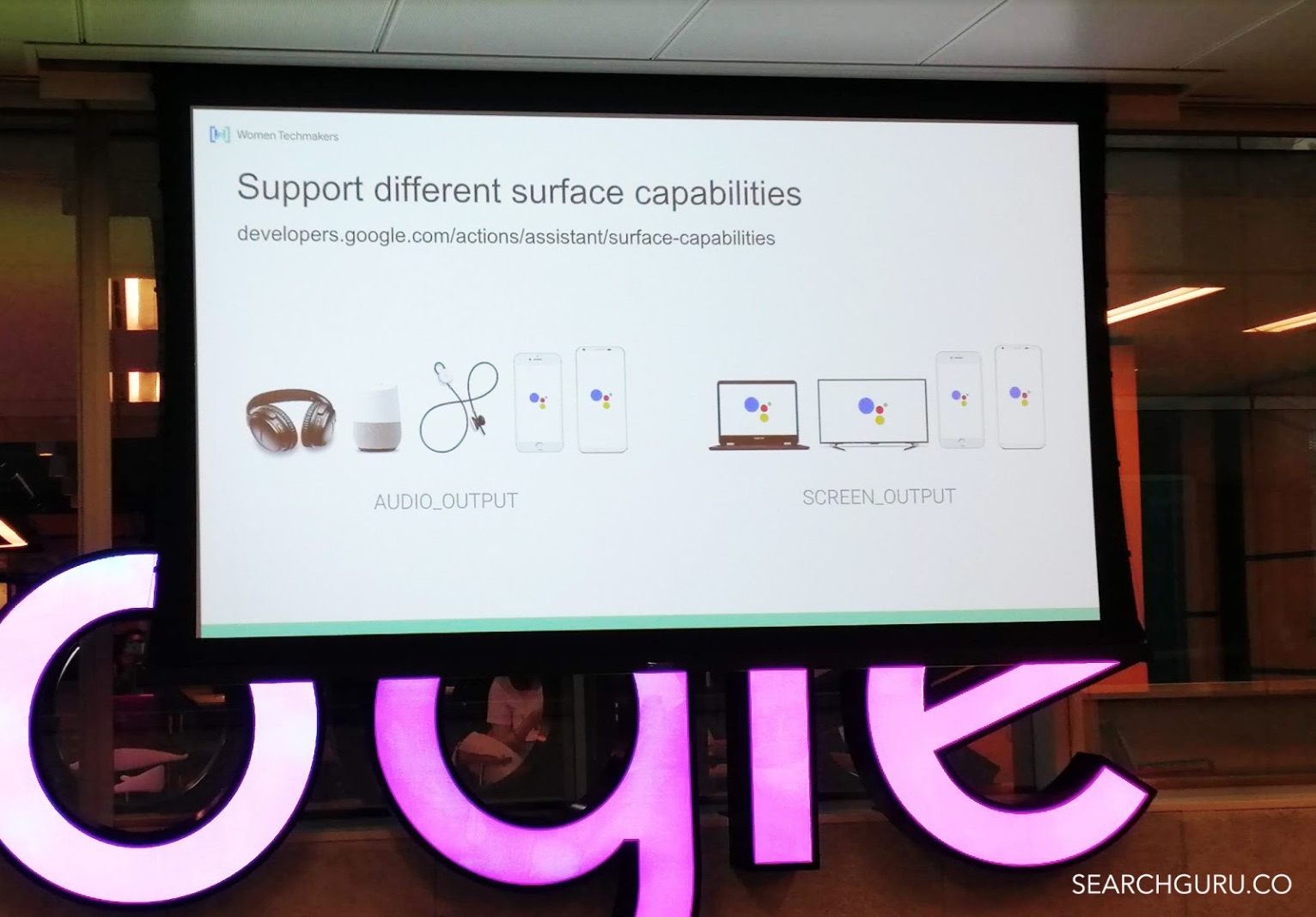

The diagram above shows Google Assistant receiving the user input (the intention) and passing it to the Action database via a conversation API request.

The Action database then helps Google Assistant fulfil the request, returning a conversation API response to the user.
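This request/response round trip can take a concrete form in a Dialogflow fulfilment webhook. Dialogflow (ES v2) sends JSON whose `queryResult` carries the matched intent's `displayName` and the extracted `parameters`. The webhook answers with JSON containing `fulfillmentText`. The sketch below covers only that core. It assumes a hypothetical intent named `order.breakfast` with a hypothetical `dish` parameter, and it leaves out the HTTP server and all other request fields.

```python
import json


def handle_webhook(request_body: str) -> str:
    """Turn a Dialogflow-style webhook request into a webhook response."""
    req = json.loads(request_body)
    query = req.get("queryResult", {})
    intent = query.get("intent", {}).get("displayName", "")
    params = query.get("parameters", {})

    # "order.breakfast" and "dish" are hypothetical names for this example.
    if intent == "order.breakfast":
        dish = params.get("dish", "your breakfast")
        text = f"Okay, one {dish} coming up."
    else:
        text = "Sorry, I can't help with that yet."

    # Dialogflow reads the reply to the user from fulfillmentText.
    return json.dumps({"fulfillmentText": text})
```

In a real deployment this function would sit behind an HTTPS endpoint registered as the agent's fulfilment URL.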

Generally, we can ask Google Assistant to book a ride, shop for a product from an online store, book a restaurant table or hotel room, and more. Did you know there are 1 million Actions available for you to try?

Have you always dreamed of talking to your favourite celebrity? In the USA, you can ask Google Assistant to talk like John Legend. Just say “Hey Google, talk like a Legend” to get started.

How does Google Assistant process natural language?

The flow chart below explains how Google Assistant processes natural language input from a user. If you work on SEO for Voice Search, you will notice the importance of the Knowledge Graph, which Google Assistant uses to answer users' queries. Rich snippets, HTML tagging, Q&A content, and facts and figures all contribute to the Google Knowledge Graph.

The flow passes through these key roles: User’s Command > Google Assistant (speech-to-text and natural language processing) > Google Dialogflow > Google Cloud Platform > Action Database. Developers train Google Assistant so that it reacts seamlessly to natural language and takes the right action with high accuracy.
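The chain of roles above can be pictured as a pipeline in which each stage enriches a shared "turn" record and hands it to the next stage. This is a minimal sketch under loud assumptions. Every stage is a hypothetical placeholder: `speech_to_text` just lowercases text that is assumed to be already transcribed, `understand` stands in for Dialogflow, and `fulfil` stands in for the backend and Action database.

```python
from typing import Callable, Dict, List

Turn = Dict[str, str]


def speech_to_text(turn: Turn) -> Turn:
    # Placeholder: real speech recognition would convert audio into text.
    turn["text"] = turn["audio"].lower()
    return turn


def understand(turn: Turn) -> Turn:
    # Placeholder for Dialogflow: map the text to an intent name.
    turn["intent"] = "book.ride" if "ride" in turn["text"] else "fallback"
    return turn


def fulfil(turn: Turn) -> Turn:
    # Placeholder for the backend / Action database answering the intent.
    replies = {
        "book.ride": "Booking a ride for you.",
        "fallback": "Sorry, could you rephrase?",
    }
    turn["reply"] = replies[turn["intent"]]
    return turn


PIPELINE: List[Callable[[Turn], Turn]] = [speech_to_text, understand, fulfil]


def run(audio: str) -> str:
    """Pass one user command through every stage in order."""
    turn: Turn = {"audio": audio}
    for stage in PIPELINE:
        turn = stage(turn)
    return turn["reply"]
```

Keeping each stage as a separate function mirrors the diagram: any one stage can be swapped out without touching the others.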

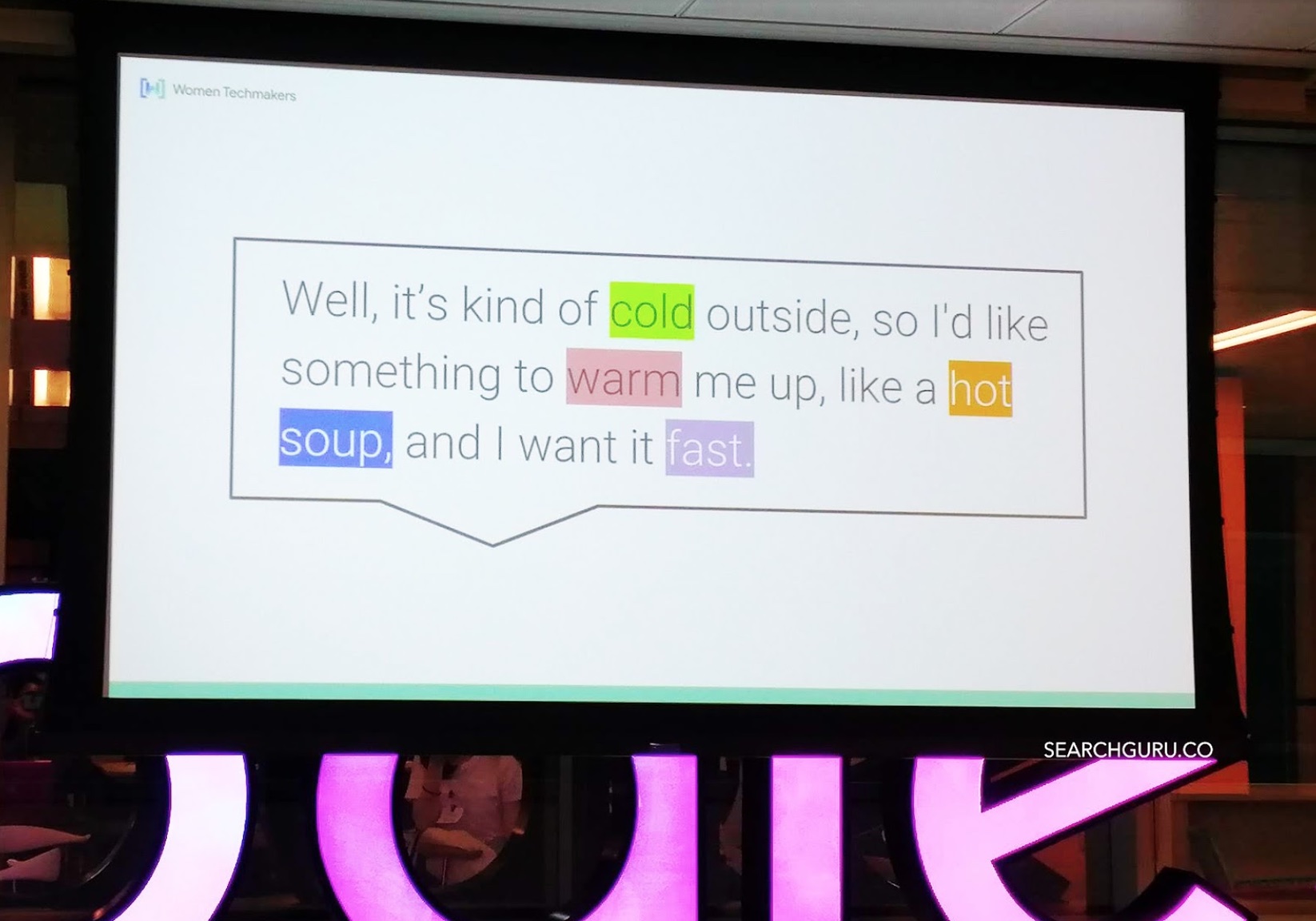

How does Google Assistant understand the context from natural language?

Google Assistant’s speech-to-text technology captures keywords from a natural language sentence and relates them to a specific action.
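To make "relating keywords to an action" concrete, here is a deliberately naive sketch that scores each action by how many of its keywords appear in the utterance. This keyword-overlap technique is a swapped-in illustration, not how Google Assistant actually works (it uses trained machine-learning models). The action names and keyword sets are hypothetical.

```python
import re

# Hypothetical keyword sets for actions mentioned in this article.
ACTION_KEYWORDS = {
    "book_ride": {"ride", "taxi", "car"},
    "book_table": {"restaurant", "table", "dinner"},
    "set_alarm": {"alarm", "wake"},
}


def tokenize(utterance: str) -> set:
    """Lowercase the utterance and split it into a set of words."""
    return set(re.findall(r"[a-z']+", utterance.lower()))


def pick_action(utterance: str):
    """Return the action sharing the most keywords, or None if none match."""
    words = tokenize(utterance)
    scores = {action: len(words & kws) for action, kws in ACTION_KEYWORDS.items()}
    best = max(scores, key=scores.get)  # ties go to the first action listed
    return best if scores[best] > 0 else None
```

For example, "Can you get me a taxi to the airport?" maps to `book_ride`, while "What's the weather?" matches nothing and returns `None`.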

How do developers empower Google Assistant’s machine learning?

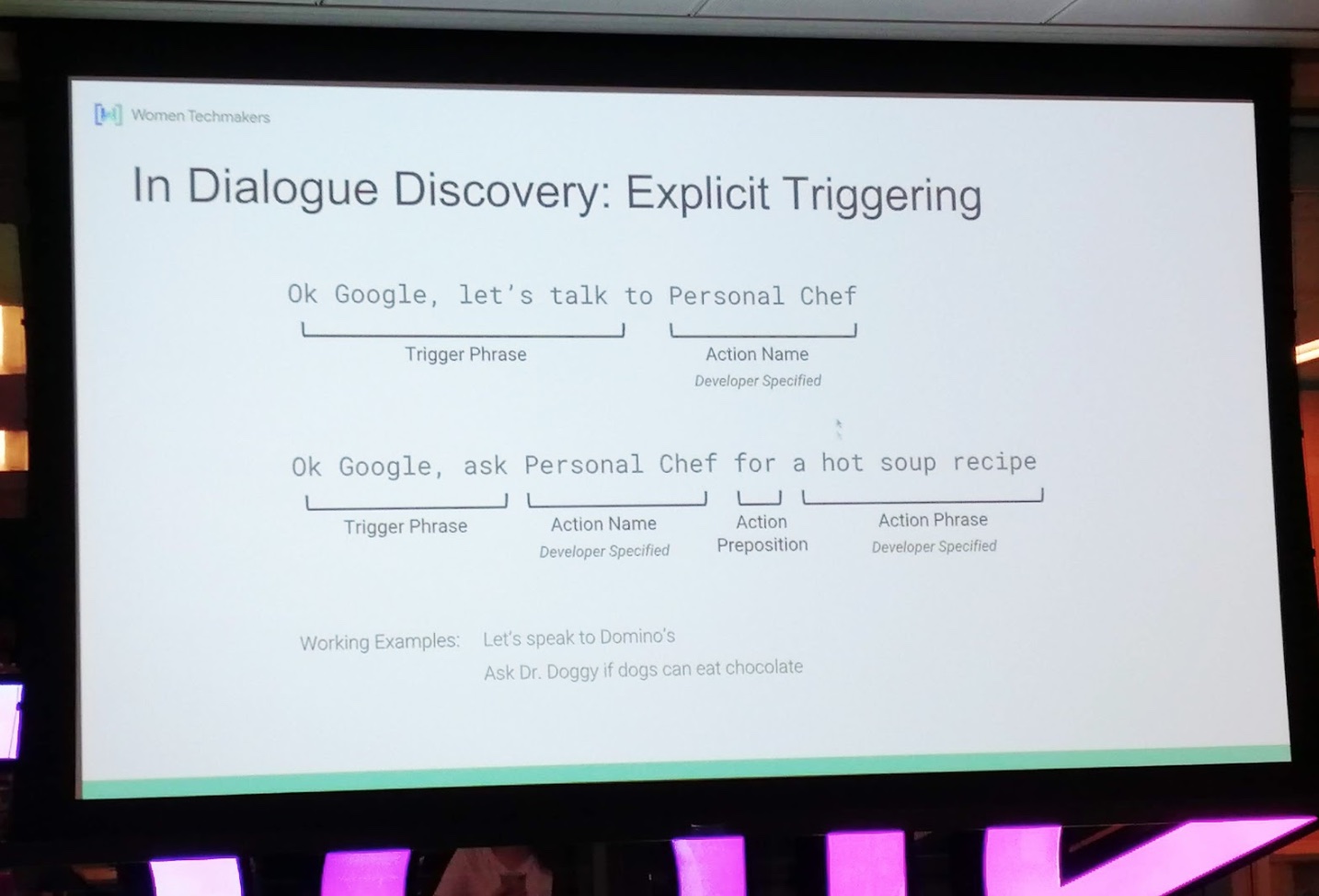

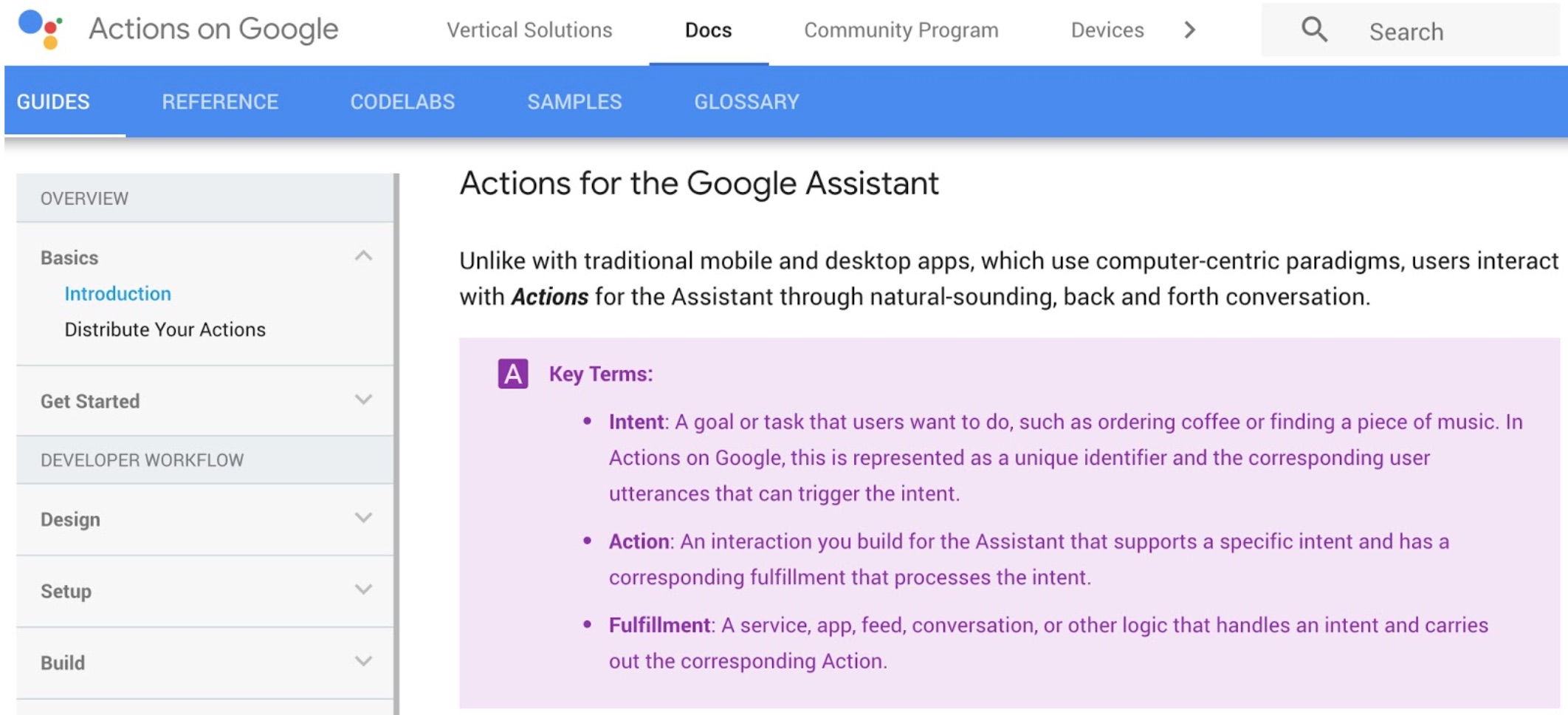

A machine’s intelligence would be very limited without better control from developers. To make Google Assistant smarter at understanding human languages and unique intents, Google created Actions on Google (AoG), a free, powerful platform that lets the public build software extending the functionality of Google Assistant.

Google Assistant goes beyond setting an alarm clock, making a call or booking a ride. Through Actions on Google, developers can easily create and manage interesting, effective conversational experiences between users and their own third-party fulfilment service. For instance, a user could order a pack of seafood Nasi Lemak with Teh Tarik for breakfast from your app via Google Assistant. See this success case of ordering Domino’s Pizza via Google Home.

How do developers train Google Assistant to understand natural language?

Have you ever wondered how the Assistant parses the semantic meaning of user input, especially spoken input? Google’s Natural Language Understanding (NLU) technology helps the Assistant interpret the meaning of the words it recognizes in speech.

If you are customizing your own Actions (such as fulfilling a Nasi Lemak order by a Cantonese user), Google offers a service named Dialogflow to let you handle NLU effectively. Dialogflow simplifies the task of understanding user input, extracting keywords and phrases from the input, and returning responses. You can define how all this works within a Dialogflow agent.
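The following toy "agent" mimics the three Dialogflow concepts named above: intents with training phrases, an entity with synonyms, and response templates. It is only a sketch. Real Dialogflow matches intents with machine learning, not the simple word-overlap scoring used here. The intents, phrases and `dish` entity are all hypothetical.

```python
import re

# Hypothetical entity: reference value -> synonyms that map to it.
DISH_ENTITY = {
    "nasi lemak": ["nasi lemak", "coconut rice"],
    "teh tarik": ["teh tarik", "pulled tea"],
}

# Hypothetical intents, each with training phrases and a response template.
INTENTS = {
    "order.food": {
        "training_phrases": ["i want to order", "can i get", "order me some"],
        "response": "Sure, ordering {dish} for you.",
    },
    "greeting": {
        "training_phrases": ["hello", "hi there", "good morning"],
        "response": "Hi! What would you like for breakfast?",
    },
}


def words(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower()))


def extract_dishes(text: str) -> list:
    """Return the reference value of every dish whose synonym appears."""
    lowered = text.lower()
    return [ref for ref, syns in DISH_ENTITY.items() if any(s in lowered for s in syns)]


def detect_intent(text: str):
    """Pick the intent whose training phrase shares the most words, then respond."""
    tokens = words(text)
    best, best_score = None, 0
    for name, intent in INTENTS.items():
        for phrase in intent["training_phrases"]:
            score = len(tokens & words(phrase))
            if score > best_score:
                best, best_score = name, score
    if best is None:
        return None, "Sorry, I didn't get that."
    dishes = extract_dishes(text)
    reply = INTENTS[best]["response"].format(dish=" and ".join(dishes) or "something")
    return best, reply
```

Note how synonyms normalize the parameter. "Can I get coconut rice and pulled tea?" resolves to `order.food`, and the dishes become "nasi lemak" and "teh tarik".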

How do you start building Actions for Google Assistant?

Be part of AI and machine learning! Training Google Assistant for the first time is fully guided by the detailed instructions on the Codelabs platform.

What you’ll learn:

- How Actions work.

- How to create a project in the Actions Console.

- How to create a Dialogflow agent.

- How to define training phrases in Dialogflow.

- How to test your Action in the Actions Console Simulator.

- How to use Dialogflow slot-filling and system entities.

- How to implement Action fulfilment using the Actions on Google client library and the Dialogflow inline editor.
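Slot-filling means the agent keeps asking follow-up questions until every required parameter has a value. In Dialogflow, you mark parameters as required and give each one a prompt, and the agent handles the follow-up turns for you. The sketch below rebuilds the idea by hand to show the logic. Regular expressions stand in for entity types, and the number slot plays the role that a system entity such as `@sys.number` would play. The slot names, prompts and dishes are hypothetical.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Slot:
    name: str
    pattern: str  # regex standing in for an entity type
    prompt: str   # asked while the slot is still empty


# Hypothetical required parameters for a breakfast order.
SLOTS = [
    Slot("quantity", r"\b(\d+)\b", "How many would you like?"),
    Slot("dish", r"\b(nasi lemak|teh tarik)\b", "Which dish would you like?"),
]


@dataclass
class OrderSession:
    values: dict = field(default_factory=dict)

    def handle(self, utterance: str) -> str:
        text = utterance.lower()
        # Fill any empty slot that this utterance provides a value for.
        for slot in SLOTS:
            if slot.name not in self.values:
                match = re.search(slot.pattern, text)
                if match:
                    self.values[slot.name] = match.group(1)
        # Prompt for the first slot that is still missing.
        for slot in SLOTS:
            if slot.name not in self.values:
                return slot.prompt
        return f"Ordering {self.values['quantity']} x {self.values['dish']}."
```

A session that starts with "I'd like to order breakfast" is asked for a quantity, then for a dish. It confirms only once both slots are filled.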

Learn more about how Google Assistant can enrich your lifestyle.